Heuristic Survey and Feedback Framework Design

Introduction and Overview

Our client asked us to design a user survey and accompanying analytics dashboard for their product development tool.

Along the way, however, we created an innovative, heuristic-based feedback evaluation framework that delivers actionable insights for early feature and application development.

Our Client

Parlay is a product development startup that offers a tool letting developers anticipate the impact of new features without first investing in expensive development. It engages users in the development process, allowing designers and engineers to stay connected with their customers as they grow their products.

Parlay:

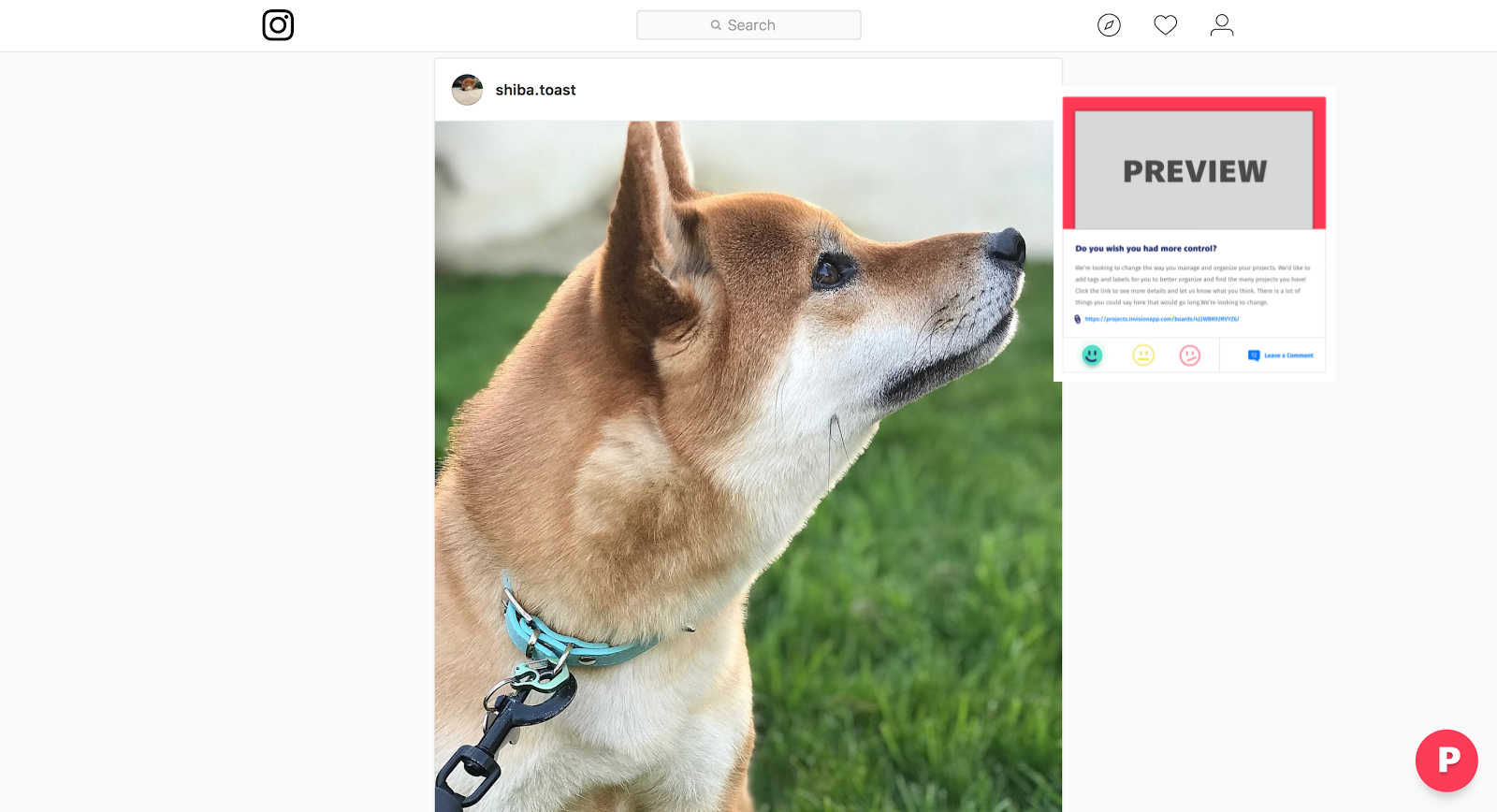

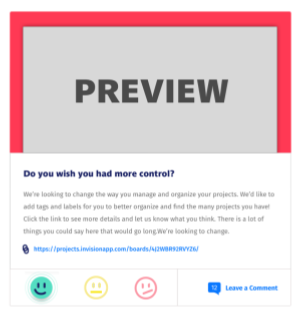

Users of websites and applications that have implemented Parlay, and who are given the opportunity to opt into the program, are presented with modal previews of proposed features along with an invitation to provide feedback via a short survey. This information is collected and provided to the website or application administrators.

This is Bub. He's Parlay's robot mascot.

Process

Discovery

Competitive Analysis

Parlay's concept is the product of years of industry experience developing products and features. Its team feels that no existing tool both provides prototyping functions and offers meaningful insights that help developers and designers make informed decisions without committing extensive resources.

In the absence of direct competition, we turned to indirect competitive analysis in order to build a relevant picture of the industry and justify our design choices and deliverables strategy.

We selected six products and companies that were relevant to our project scope based on their comparable offerings. We treated them as indirect competitors because none of them offers the contextual feature previews that are Parlay's bread and butter.

Based on our competitive research, we concluded that our surveys and analytics should accommodate A/B testing and sentiment-specific analysis. Meanwhile, our dashboard should build on the existing standards and conventions that inform analytics suites around the industry.

Dashboards around the development and analytics industry

User Interviews

We spoke to 12 industry experts who have extensive experience and represent every role in the development process, from engineer to designer to project manager.

We asked these experts about their development process and what elements they feel are most important in the early and late design stages. We also talked to them about the place feedback and analytics hold in their design process.

Based on these conversations, we identified four major stakeholders in the product development process, each with different wants and needs when it comes to feedback tools. We knew we would want to address each of these needs as much as possible in our surveys, our dashboard, or both.

Personas

We used this research to develop a trio of personas that we relied on as we designed our analytics dashboard, our survey flows, and the framework we would eventually base all of our work on.

Conclusions

Combining our competitive analysis, user interviews, and personas, we concluded that our surveys would need to be quick and conversational in order to avoid asking too much of otherwise-engaged users.

Meanwhile, the analytics would need to provide concise, easy-to-transcribe findings that product developers could readily share with stakeholders. Those findings should provide actionable insights that product teams could use to inform their decision making.

Problem Statement

As we dove deeper into our research, we determined that our real challenge was even greater than we initially envisioned. While the design of a simple survey and dashboard seemed relatively straightforward, our client's vision called for something that would truly set their tool apart from their competition.

Product teams often have trouble anticipating their users’ wants and needs, and speaking about those wants and needs in a common language.

How do we help developers and admins make informed decisions based on speculative data?

The first key lay in the requirement that our solution deliver those actionable insights. By their very nature, as part of a digital prototyping development tool, our surveys would be asking customers to rate features that haven't been built yet.

How do we provide a standard set of criteria that Parlay's customers - developers, designers, product managers, etc. - can all easily adopt and understand?

The second key lay in ensuring our solution appealed to many different kinds of users, with varying backgrounds and levels of design and development knowledge. Our solution would need to be universally scalable in order to offer credible and reliable feedback.

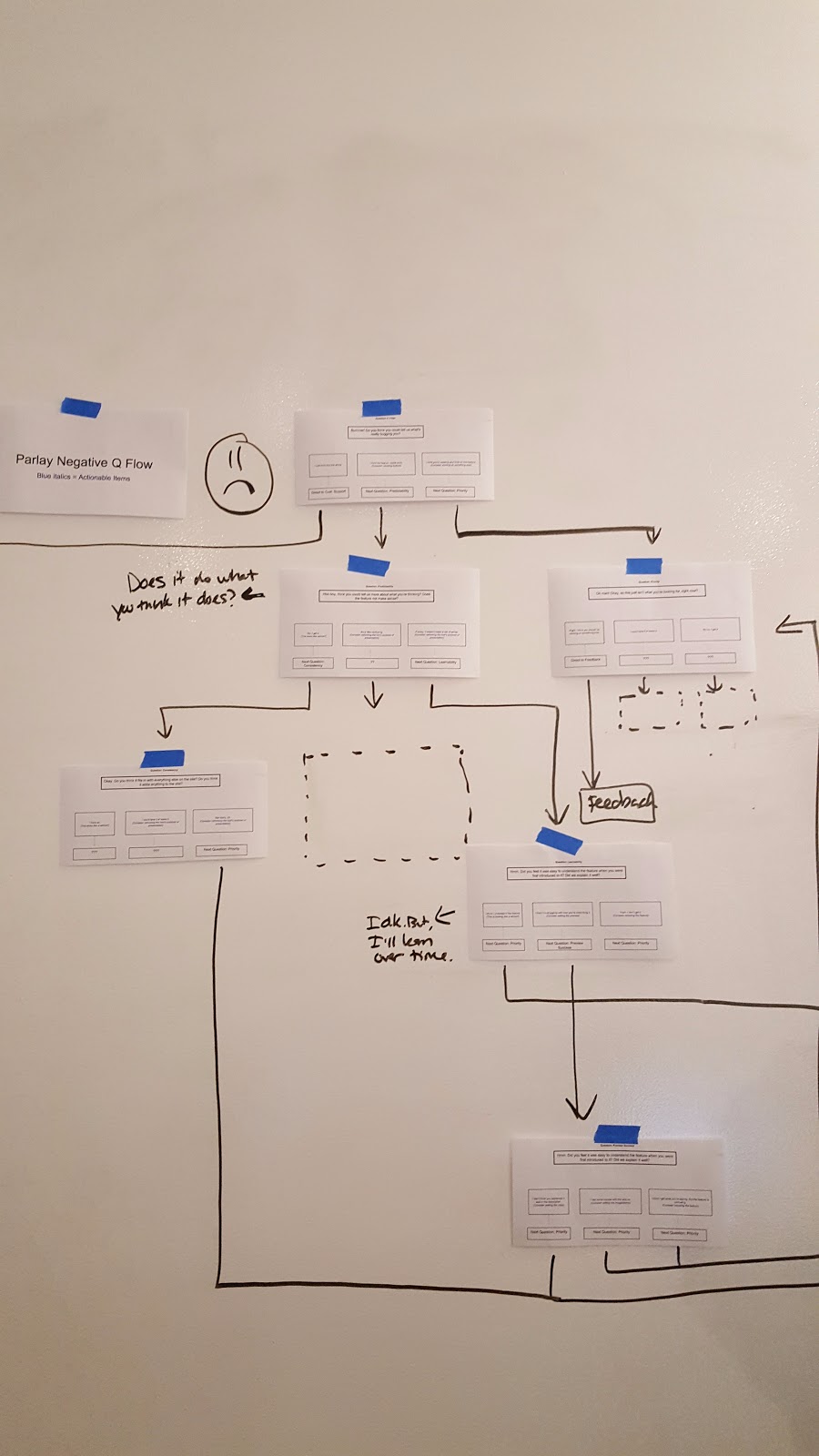

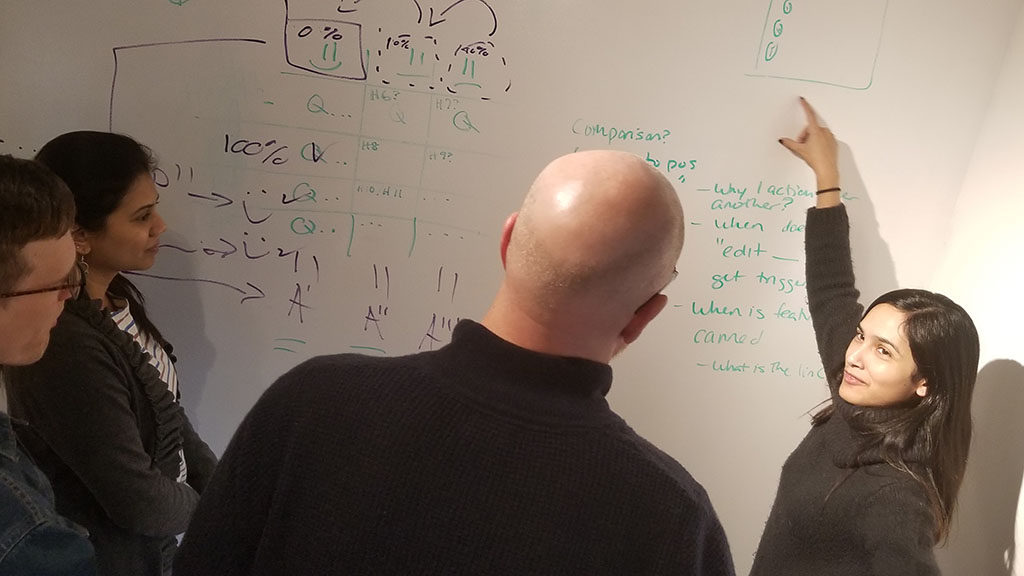

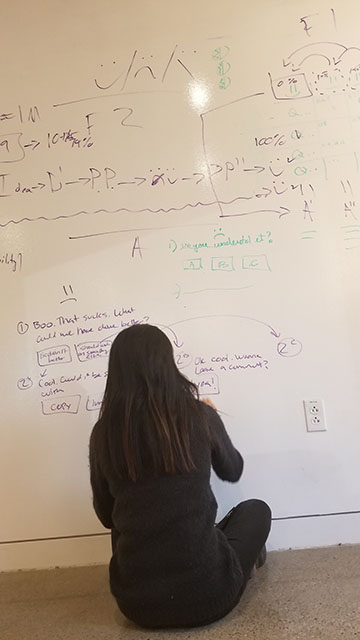

So we began to sketch.

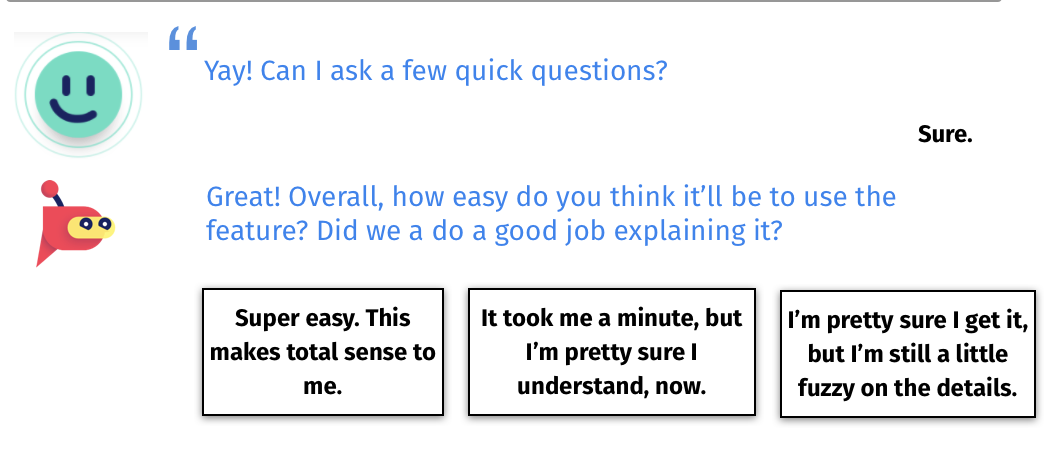

Initially, we set out to hash out a chatbot flow scheme. We thought about how we might arrange and direct close-ended questions so that they would consistently provide useful data.

At the same time, we started to think about what our questions would be. Parlay uses the Customer Happiness Index (CHI) to collect customer feedback, so we knew that we needed three surveys, one for each potential CHI sentiment: Positive, Neutral, and Negative.

I suggested we try employing a set of Usability Heuristics to get the ball rolling. The idea bore fruit: it gave us a point of reference that helped us define what information designers and developers might value when considering a proposed feature's viability.

Ultimately, it was this step that started us down the path of creating an entirely new way of collecting and synthesizing user feedback.

Heuristics

What are they?

Usability Heuristics in the design process represent an important tool for measuring how successful or effective a design is. Individual heuristics address different elements and issues, but they all aim to measure the degree to which the design achieves the goal its creator sought.

While the internet contains innumerable articles and white papers describing countless usability heuristics, the Nielsen Norman Group has defined some of the most widely adopted terms and definitions. For example:

Visibility of System Status

The system should always keep users informed about what is going on, through appropriate feedback within reasonable time.

Consistency and Standards

Users should not have to wonder whether different words, situations, or actions mean the same thing. Follow platform conventions.

Aesthetic and Minimalist Design

Dialogues should not contain information which is irrelevant or rarely needed. Every extra unit of information in a dialogue competes with the relevant units of information and diminishes their relative visibility.

(Images courtesy of UXIndia.com)

In considering the challenge of meeting our client's vision of providing actionable insights, we realized that heuristics could be an ideal framework on which to base our survey and dashboard.

The Design Community

We scoured the internet and put together a list of 50 heuristics and their definitions. We wanted to create a large list for our client as part of our deliverables. However, we also wanted to poll the design community to see what they had to say about the most universally relevant and important heuristics.

Because Parlay's customers might want to preview any type of feature or element on their product, we asked respondents to resist answering the question in the context of specific projects. We asked them, instead, to try to choose their favorites with as generic and unbiased a mindset as possible.

Across close to 100 responses from designers around the world, the top five heuristics were:

Speculative Heuristics

With our ranked list of usability heuristics, we had an idea of what the design community as a whole considers the most important metrics when evaluating a product or website. As we contemplated our client's needs, however, it became clear that we would have to take the existing standards and give them a tweak.

Because Parlay asks users to evaluate products and features which haven't been created yet, those meaningful, actionable insights which separate Parlay from its competition must be based on speculation.

As a result, we would have to rewrite the established conventions.

For example, in a typical feedback evaluation, one might ask: "Did you understand the feature?"

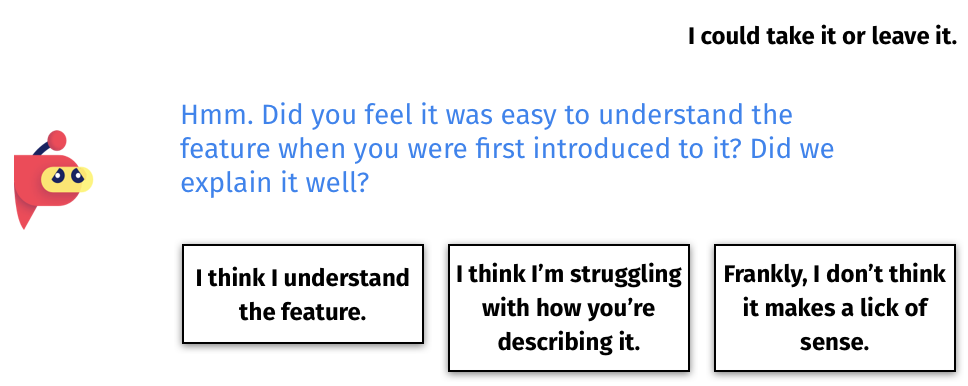

In our case, where the feature is little more than a proposal at the time of evaluation, we would instead have to ask something closer to, "Do you feel you would or could understand the feature?"

In order to recalibrate for this speculative setting, we conceived of a series of six heuristics that could be used to define the framework of both our survey and analytics suite, addressing the needs of Parlay's prospective users and encompassing the common considerations developers encounter when planning and evaluating features.

The Survey

With our custom heuristics in hand, we dove into the process of designing and writing our customer feedback surveys.

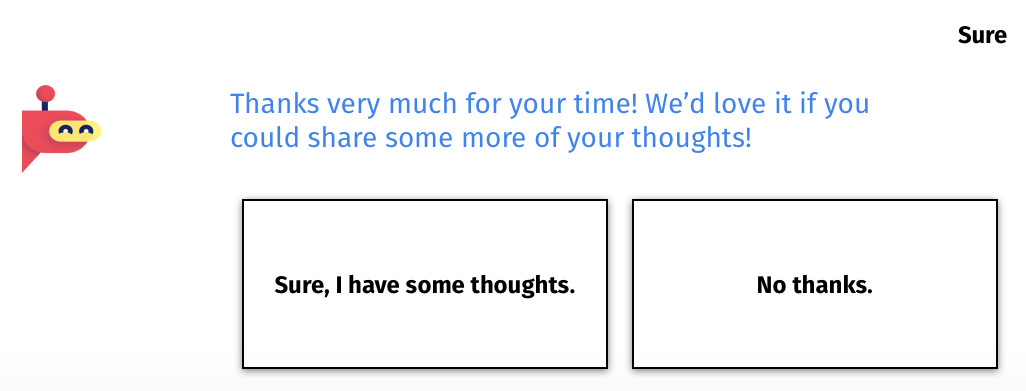

Based on our research and our conversations with the client, we knew the questions needed to balance a casual tone with clear and professional intent.

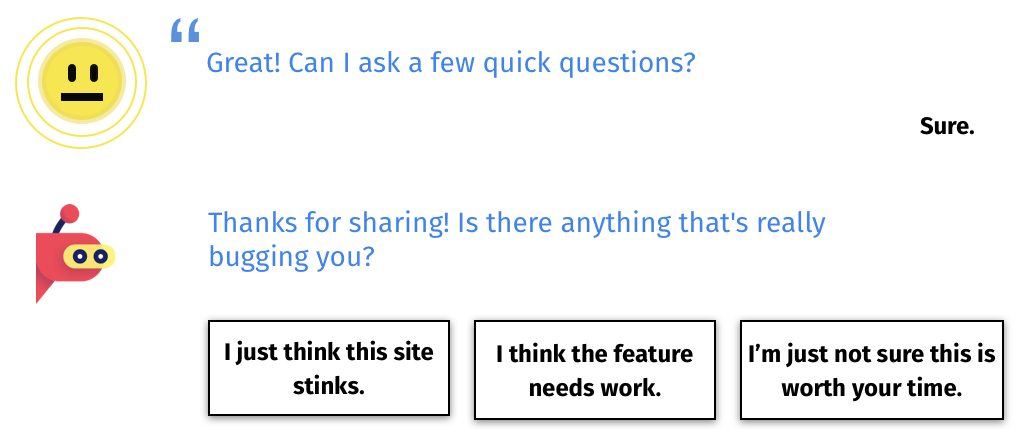

Given our client's commitment to the CHI feedback system, we also knew that we would want three separate question flows in order to accommodate the Positive, Neutral, and Negative responses.

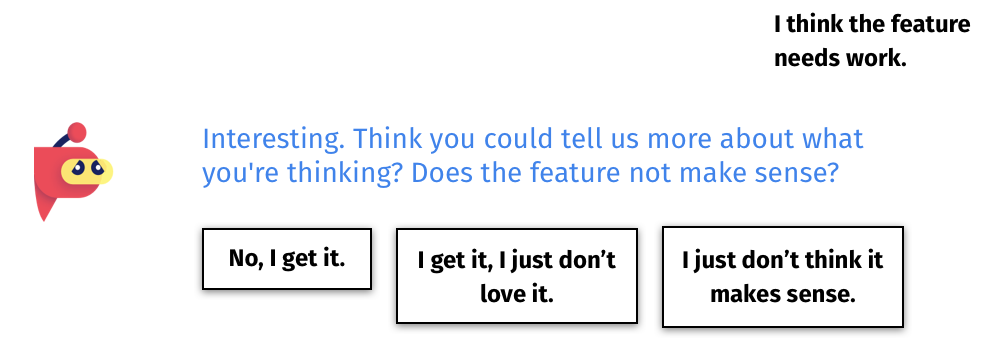

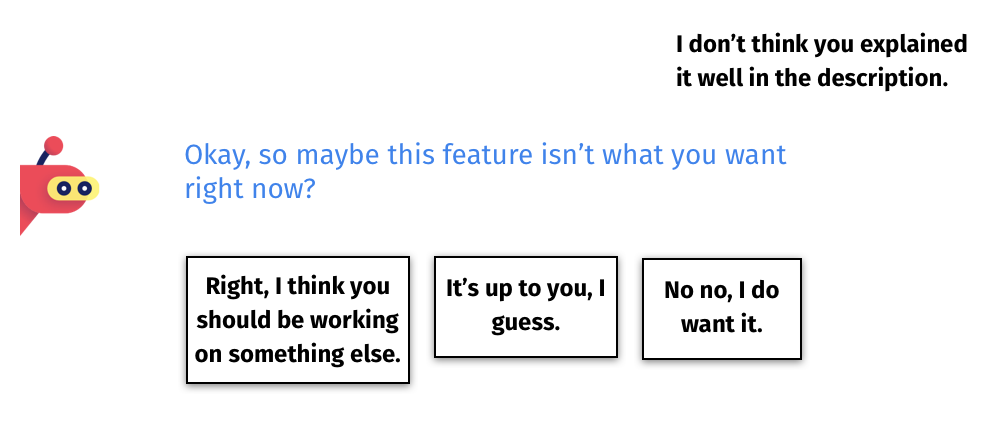

The three different survey flows would allow us to utilize different heuristics and help inform the questions we would select. For example, if a respondent indicates they don't like a feature, it wouldn't be useful to ask that respondent if they'd be willing to pay money for it.

We identified the heuristics that would best fit the three respective CHI sentiments and wrote questions which addressed them while remaining consistent with the tone established by the user in their initial CHI selection. We also endeavored to maintain the casual and conversational tone that the client established as part of their brand.
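The branching described above can be sketched as a lookup from the respondent's initial CHI sentiment to a sentiment-specific question list. This is a minimal illustration, not Parlay's implementation: the `Sentiment` enum, the `FLOWS` table, `questions_for`, and every question's wording are hypothetical placeholders. It only encodes the rule stated in the text, that a willingness-to-pay question belongs in the Positive flow and never in the Negative one.

```python
from enum import Enum


class Sentiment(Enum):
    """The three CHI sentiments a respondent can pick first."""
    POSITIVE = "positive"
    NEUTRAL = "neutral"
    NEGATIVE = "negative"


# Hypothetical question flows; the wording is illustrative only.
FLOWS = {
    Sentiment.POSITIVE: [
        "What do you like most about this feature?",
        "Would you be willing to pay for it?",  # only asked of happy users
    ],
    Sentiment.NEUTRAL: [
        "What would make this feature more useful to you?",
    ],
    Sentiment.NEGATIVE: [
        "What about this feature doesn't work for you?",
    ],
}


def questions_for(sentiment: Sentiment) -> list[str]:
    """Return a copy of the question flow for the chosen CHI sentiment."""
    return list(FLOWS[sentiment])
```

Keeping each flow as plain data means a question can be moved between flows without touching the routing logic.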

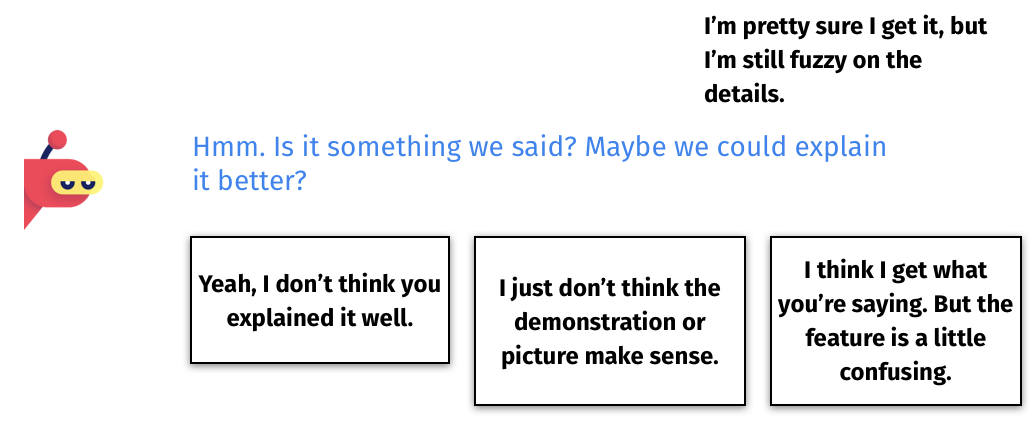

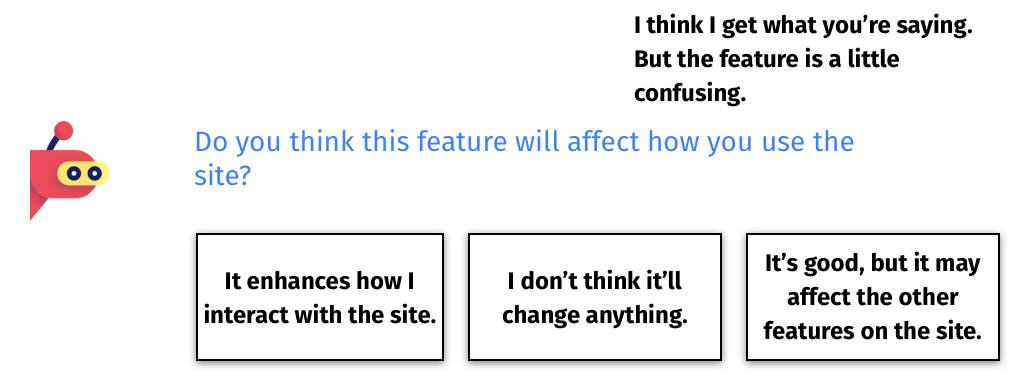

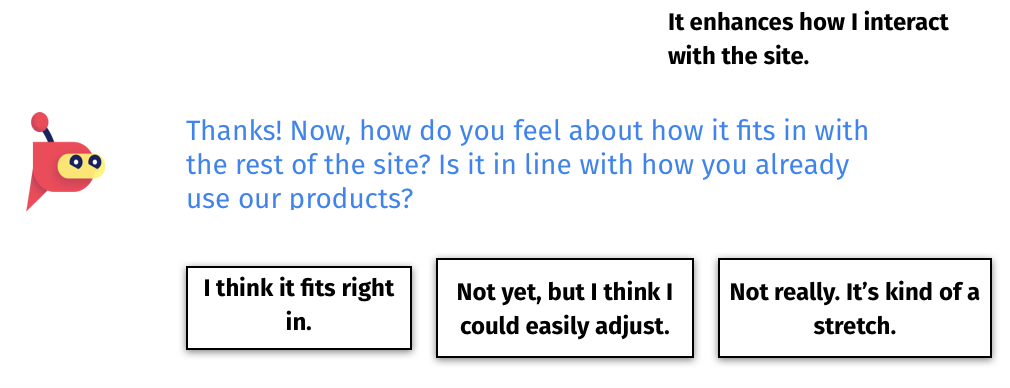

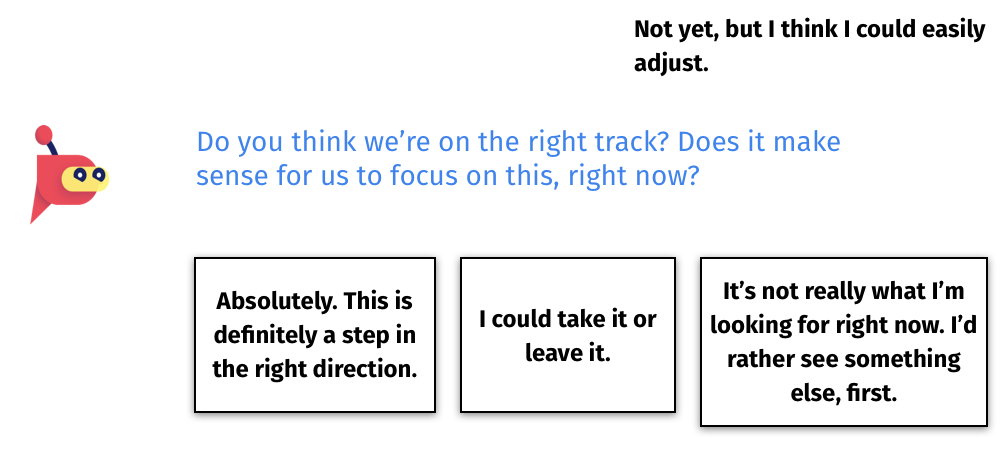

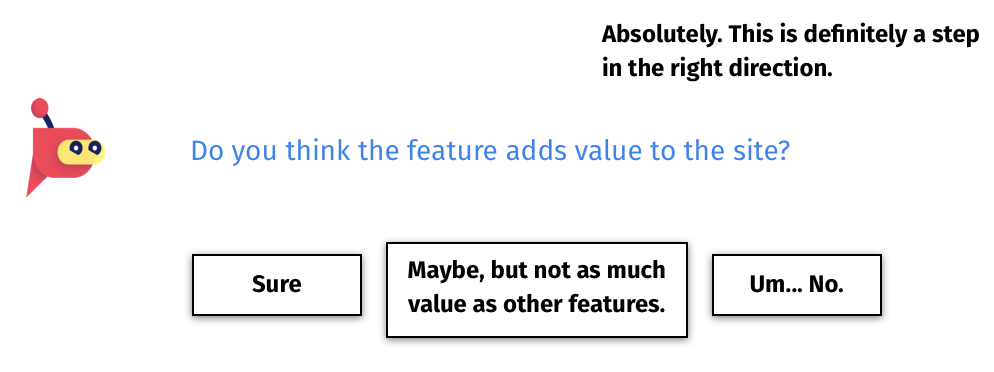

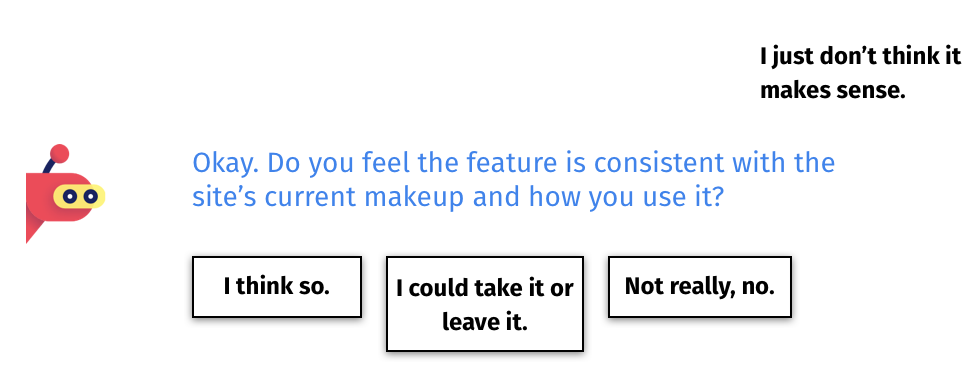

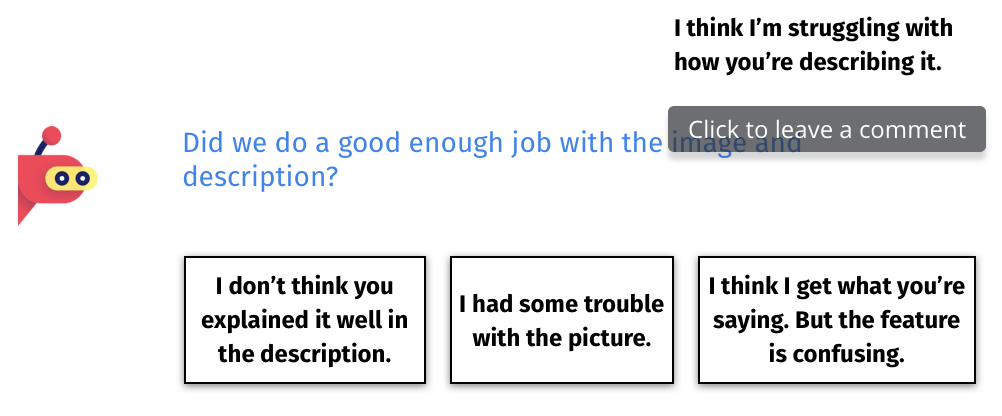

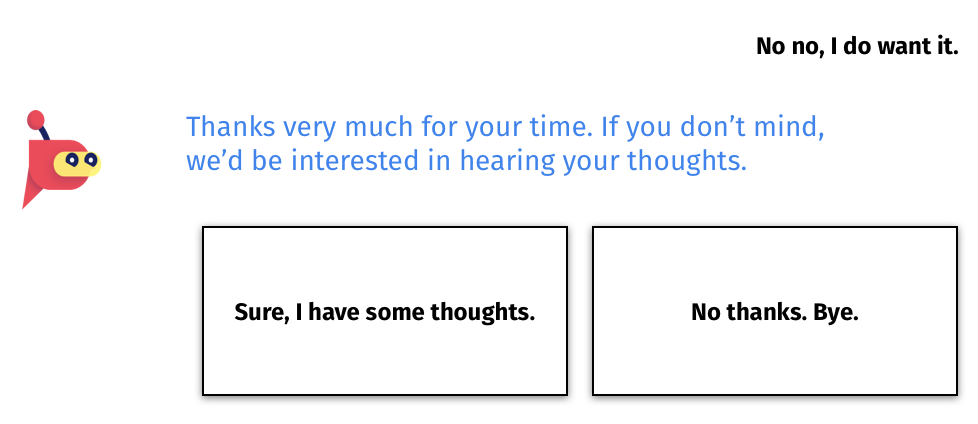

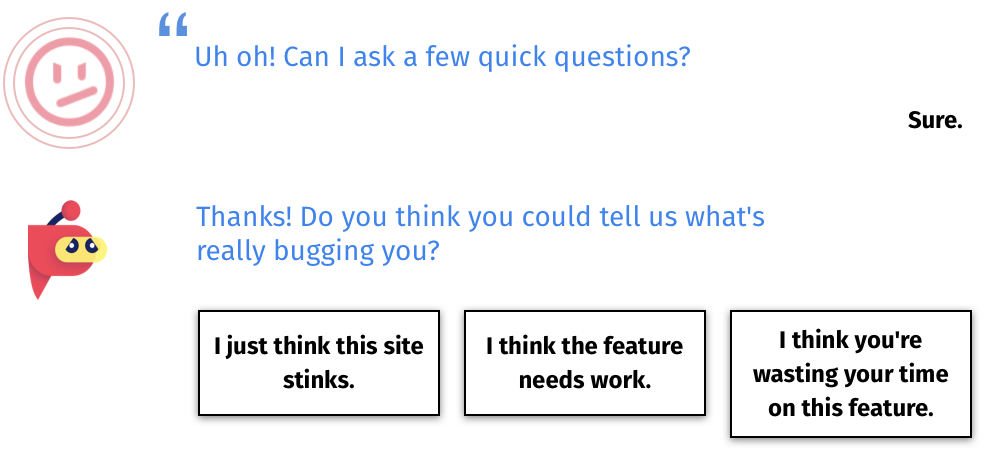

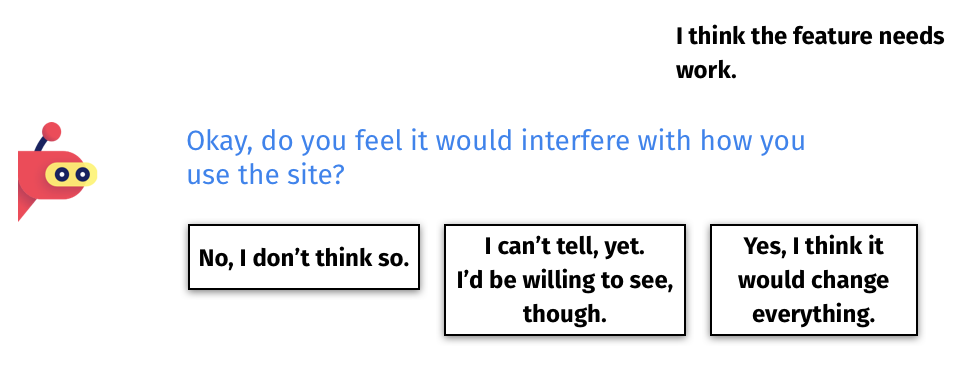

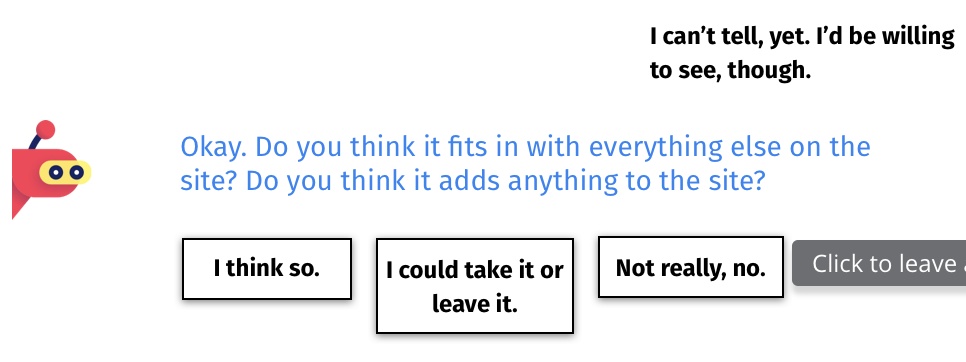

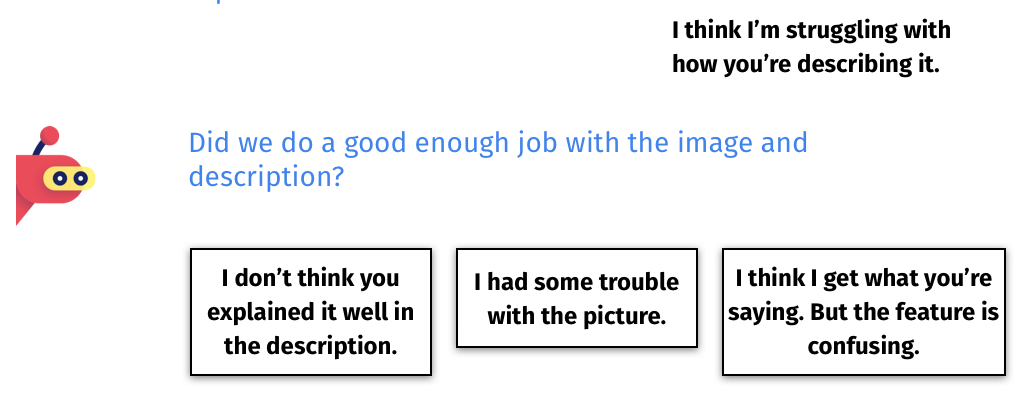

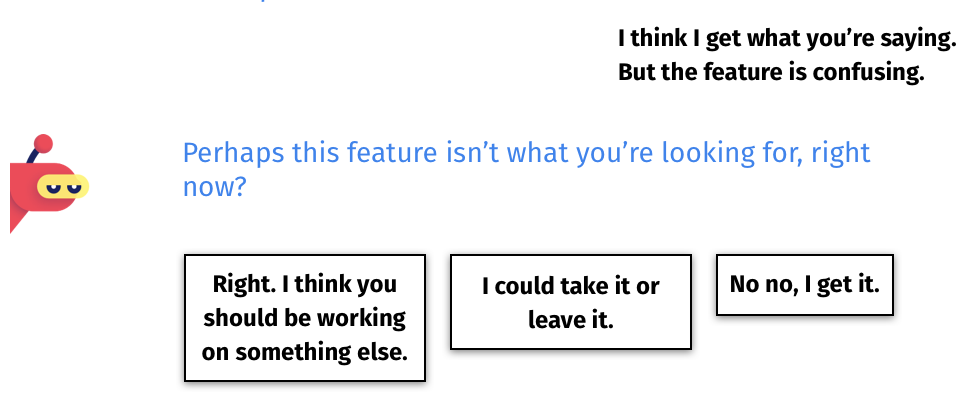

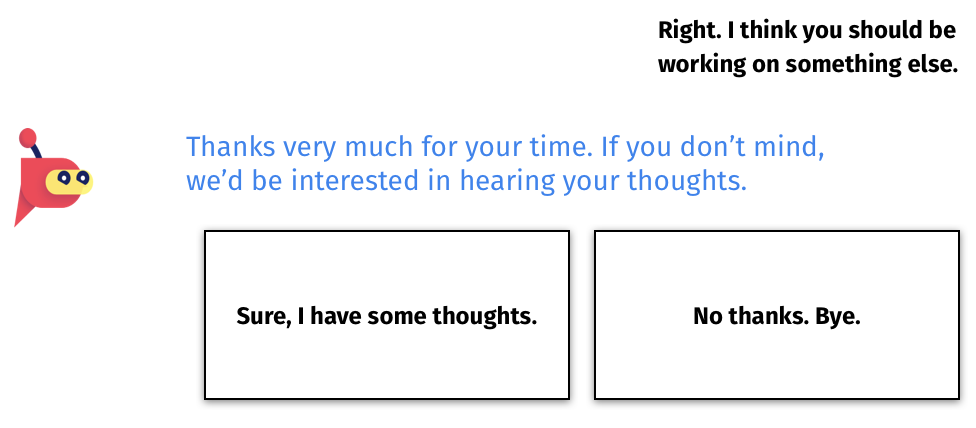

The following three flows show our proposed questions for each chatbot survey flow. Based on our research, each flow is tailored to provide the most useful data for the initial CHI response that triggers it. The questions are designed to be as generic as possible, in order to accommodate any type of feature or element a customer may wish to test.

The title of each slide represents the heuristic or logistical purpose of its question.

Positive Survey Flow

Neutral Survey Flow

Negative Survey Flow

With our survey flows and questions designed and written, we turned next to the analytics dashboard.

The Analytics Dashboard

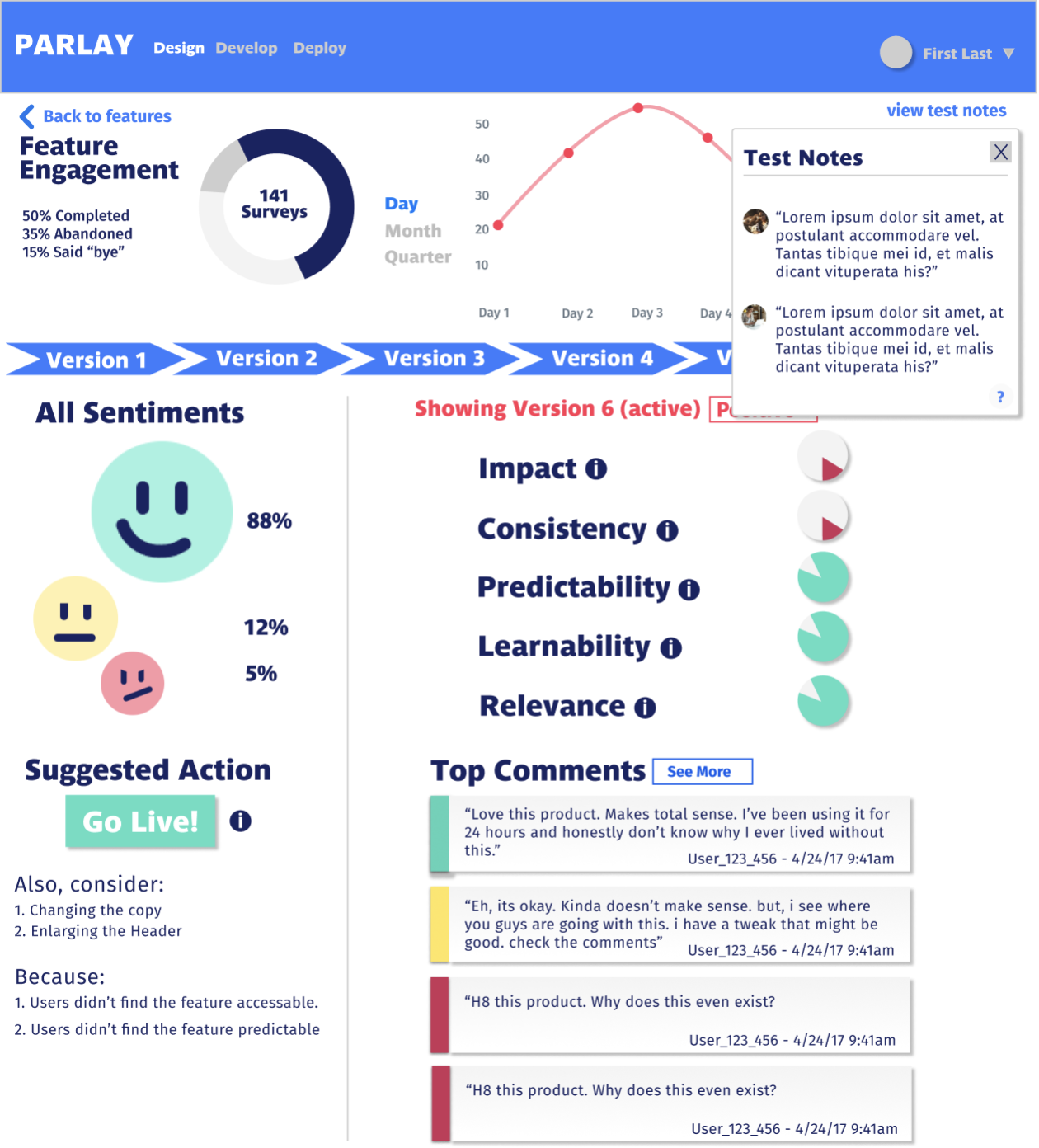

The goal of our analytics suite was to take advantage of our new heuristics framework, as well as the meaningful user feedback to be collected by our survey, and present actionable data and insights.

Our interviews suggested that, ideally, this information should be easy to digest and share with stakeholders.

Parlay's team defined "actionable insights" as informed, data-backed suggestions and recommendations that help their customers (admins, developers, designers, etc.) make decisions about their feature development strategies.

Our dashboard would be able to recommend that previewed features be edited or tweaked, redesigned, launched, or even abandoned.

The language should be clear, concise, and informative. Any data should be represented in a visually appealing and easily digested fashion.

Harvey Balls


Early in our interviews with project managers and designers, we were introduced to the concept of Harvey Balls as common signifiers for presenting progress or degree of completion.

Because of the ease with which they can be used to synthesize user feedback data, we found they are also excellent candidates for quickly and efficiently demonstrating degrees of heuristic success.

Assigning point values to the response options offered to users in the chatbot survey was a simple step. It also makes it easy for Parlay's customers to calibrate their findings to suit their individual previews and projects.
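To make the point-value idea concrete, here is a minimal sketch of how survey answers for one heuristic might be averaged and rounded to a Harvey Ball. Everything here is an assumption for illustration: the five answer labels, the 0 to 4 point scale, and the names `POINTS`, `heuristic_score`, and `harvey_ball` are hypothetical, not part of Parlay's product. The glyphs are the standard Unicode circle characters commonly used for empty, quarter, half, three-quarter, and full Harvey Balls.

```python
from statistics import mean

# Harvey Ball glyphs from empty (0%) to full (100%), in quarter steps.
HARVEY_BALLS = ["○", "◔", "◑", "◕", "●"]

# Hypothetical point values for one question's answer choices (0 = worst).
# A customer could recalibrate these per preview or project.
POINTS = {
    "Not at all": 0,
    "Not really": 1,
    "Maybe": 2,
    "Probably": 3,
    "Definitely": 4,
}
MAX_POINTS = max(POINTS.values())


def heuristic_score(responses: list[str]) -> float:
    """Average score for one heuristic, scaled to a fraction from 0 to 1.

    Raises statistics.StatisticsError if there are no responses.
    """
    return mean(POINTS[r] for r in responses) / MAX_POINTS


def harvey_ball(score: float) -> str:
    """Round a 0-1 score to the nearest quarter (half rounds up) and return its glyph."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    quarters = int(score * 4 + 0.5)
    return HARVEY_BALLS[quarters]


# Example: three respondents rate a heuristic "Probably", "Definitely", "Maybe".
print(harvey_ball(heuristic_score(["Probably", "Definitely", "Maybe"])))  # ◕
```

Because the point table is plain data, recalibrating a preview only means editing `POINTS`; the scoring and rounding stay the same.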

We iterated on a number of designs that allowed us to give customers quick and clear recommendations, while simultaneously offering the opportunity to dig deeper into the data behind those recommendations.

Dashboard Mockup

Finally, our team dove into Figma and put together a mid-fidelity mockup of a dashboard that we presented to our client along with the rest of our findings. Screens from this mockup, including various features of the dashboard, can be seen below.

This prototype is designed to accommodate version history, A/B testing, and the incorporation of user comments imported from the discussion boards proposed by our client.

Controls are meant to be simple, with many elements revealing definitions and explanations when clicked.

Next Steps

Our team presented a complete set of deliverables to our client. However, due to the time constraints of the project, there are some things we'd love to dive into further if given the opportunity.

For example, it would be fascinating to continue researching the heuristics side of the project by surveying more designers, developers, and project managers.

Future development of Parlay's service may include giving customers the ability to write and define their own heuristics, depending on what they determine is most important to their situation.

It would also be beneficial to do more user-facing research to further develop our conclusions about what design heuristics are the most pertinent and far-reaching.

In some testing, we found there was confusion among developers about what heuristics are, so it would be useful to explore onboarding and education steps that could help Parlay's customers make full use of everything the tool has to offer.

Finally, it would be interesting to read and learn more about communication and customer feedback collection in order to produce better, more effective chatbot questions.

One of the team's early attempts to wrap their heads around Parlay and what its tool could mean for the design and development industry.

Conclusions

This was an exciting project to work on with an equally exciting new company whose product has the potential to change how product teams, from startups to established companies, approach their development process.

Our initial task of creating a survey and analytics dashboard seemed straightforward, until we began to tackle the challenge of creating actionable and meaningful insights out of speculative data in a fashion that would be scalable and relevant to any customer.

Our solution using custom heuristics informed by the design and development community is simple, elegant and, most importantly, versatile.

Initially, it was a challenge to find the User Experience side of this project. However, by applying the design process to the problem and drawing on what we know of experience research and design, we arrived at a solution we found genuinely interesting, and the work itself was very fulfilling.

Acknowledgements

My Teammates: Julie Vera, Ezra Tollett, Supriya Gawas

Team Parlay: Keith Frankel, Jonah Stuart, Jason Zopf

GA: Jason Reynolds